Introduction

The Assignar team has been working hard to move away from server-based architectures in order to reduce, and ultimately eliminate, the IT operational tasks required to keep servers up and running.

Many companies are already gaining benefits from running applications in the public cloud, including cost savings from pay-as-you-go billing and improved agility through the use of on-demand IT resources. Multiple studies across application types and industries have demonstrated that migrating existing application architectures to the cloud lowers total cost of ownership (TCO) and improves time to market.

Relative to on-premises and private cloud solutions, the public cloud makes it significantly simpler to build, deploy, and manage fleets of servers and the applications that run on them. However, companies today have additional options beyond classic server or VM-based architectures to take advantage of the public cloud. Although the cloud eliminates the need for companies to purchase and maintain their own hardware, any server-based architecture still requires them to architect for scalability and reliability. Plus, companies need to own the challenges of patching and deploying to those server fleets as their applications evolve. Moreover, they must scale their server fleets to account for peak load and then attempt to scale them down when and where possible to lower costs—all while protecting the experience of end users and the integrity of internal systems. Idle, underutilised servers prove to be costly and wasteful. Analysts estimate that as many as 85 percent of servers in practice have underutilised capacity.

Serverless compute services like AWS Lambda are designed to address these challenges by offering companies a different way of approaching application design, one with inherently lower costs and faster time to market. AWS Lambda eliminates the complexity of dealing with servers at all levels of the technology stack, and introduces a pay-per-request billing model in which idle compute capacity costs nothing. Additionally, Lambda functions make it easier for organisations to adopt microservices architectures. Eliminating server infrastructure and moving to a Lambda model offers two economic advantages:

- Problems like idle servers simply cease to exist, along with their economic consequences. A serverless compute service like AWS Lambda never bills for capacity sitting idle, because charges accrue only while useful work is being performed, with millisecond-level billing granularity.

- Fleet management (including the security patching, deployments, and monitoring of servers) is no longer necessary. This means that it isn’t necessary to maintain the associated tools, processes, and on-call rotations required to support 24×7 server fleet uptime. Using Lambda to build microservices helps organisations be more agile. Without the burden of server management, companies can direct their scarce IT resources to what matters—their business.

With greatly reduced infrastructure costs, more agile and focused teams, and faster time to market, companies that have already adopted serverless approaches are gaining a key advantage over their competitors.

Understanding Serverless Applications

The advantages of the serverless approach cited above are appealing, but what are the considerations for practical implementation? What separates a serverless app from its conventional server-based counterpart?

Serverless apps are architected such that developers can focus on their core competency—writing the actual business logic. Many of the app’s boilerplate components, such as web servers, and all of the undifferentiated heavy lifting, such as software to handle reliability and scaling, are completely abstracted away from the developer. What’s left is a clean, functional approach where the business logic is triggered only when required: a mobile user sending a message, an image uploaded to the cloud, records arriving in a stream, and so forth. An asynchronous, event-based approach to application design—while not required—is very common in serverless applications, because it dovetails perfectly with the concept of code that runs (and incurs cost) only when there is work to be done.
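As an illustration of business logic that runs only when an event arrives, the following minimal Python sketch handles "an image uploaded to the cloud". It assumes the S3 event notification format delivered to Lambda, where each entry in `Records` carries `s3.bucket.name` and a URL-encoded `s3.object.key`. The function names and the thumbnail naming rule are hypothetical, and the actual image resizing is omitted.

```python
from urllib.parse import unquote_plus


def thumbnail_key(bucket, key):
    # Hypothetical business rule: thumbnails are written under a "thumbnails/" prefix.
    return f"{bucket}/thumbnails/{key}"


def handle_image_upload(event, context=None):
    """Lambda-style entry point (event, context): called once per delivered event.
    It contains business logic only; there is no server, socket, or scaling code."""
    targets = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (a space becomes '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        targets.append(thumbnail_key(bucket, key))
    return {"thumbnails": targets}
```

Called locally with an event for bucket `site-photos` and key `crew+photo.jpg`, it returns `{"thumbnails": ["site-photos/thumbnails/crew photo.jpg"]}`; on a serverless platform the same function would simply be invoked whenever an upload occurs.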

A serverless application runs in the public cloud, on a service such as AWS Lambda, which takes care of receiving events or client invocations and then instantiates and runs the code. This model offers a number of advantages compared with conventional server-based application design:

- There is no need to provision, deploy, update, monitor, or otherwise manage servers. All of the actual hardware and server software is handled by the cloud provider.

- The application scales automatically, driven by its actual use. This is inherently different from conventional applications, which require a provisioned server fleet and explicit capacity management to handle peak load.

- In addition to scaling, availability and fault tolerance are built in. No coding, configuration, or management is required to gain the benefit of these capabilities.

- There are no charges for idle capacity. There is no need (and in fact, no ability) to pre-provision or over-provision capacity. Instead, billing is pay-per-request and based on the duration it takes for code to run.
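The pay-per-request model can be made concrete with a little arithmetic. Lambda charges per request plus a compute charge measured in GB-seconds (allocated memory in GB multiplied by execution time in seconds). The sketch below compares that with an always-on server billed by the hour. All prices are placeholder parameters, not current AWS rates, so substitute figures from the relevant pricing pages.

```python
HOURS_PER_MONTH = 730  # approximate average month


def serverless_monthly_cost(requests, avg_duration_ms, memory_mb,
                            price_per_million_requests, price_per_gb_second):
    """Pay-per-request: with zero requests, the cost is zero."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_charge = requests / 1_000_000 * price_per_million_requests
    return request_charge + gb_seconds * price_per_gb_second


def server_monthly_cost(instances, price_per_hour, hours=HOURS_PER_MONTH):
    """Provisioned capacity: every instance is billed for every hour, busy or idle."""
    return instances * price_per_hour * hours
```

With placeholder rates of 0.20 per million requests and 0.0000167 per GB-second, one million 100 ms invocations at 1,024 MB amount to 100,000 GB-seconds, or about 1.87 in total, while a month with no traffic costs 0. Two servers at a placeholder 0.10 per hour cost 146 whether or not they receive any traffic.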

Serverless Application Use Cases

The serverless application model is generic, and applies to almost any type of application from a startup’s web app to a Fortune 100 company’s stock trade analysis platform. Here are a few examples:

- Web apps and websites – Eliminating servers makes it possible to create web apps that cost almost nothing when there is no traffic, while simultaneously scaling to handle peak loads, even unexpected ones.

- Mobile backends – Serverless mobile backends offer a way for developers who focus on client development to easily create secure, highly available, and perfectly scaled backends without becoming experts in distributed systems design.

- Media and log processing – Serverless approaches offer natural parallelism, making it simpler to process compute-heavy workloads without the complexity of building multithreaded systems or manually scaling compute fleets.

- IT automation – Serverless functions can be attached to alarms and monitors to provide customisation when required. Cron jobs and other IT infrastructure requirements are made substantially simpler to implement by removing the requirement to own and maintain servers for their use, especially when these jobs and requirements are infrequent or variable in nature.

- IoT backends – The ability to bring any code, including native libraries, simplifies the process of creating cloud-based systems that can implement device-specific algorithms.

- Chatbots (including voice-enabled assistants) and other webhook-based systems – Serverless approaches are a perfect fit for any webhook-based system, like a chatbot. Their ability to perform actions (like running code) only when needed (such as when a user requests information from a chatbot) makes them a straightforward and typically lower-cost approach for these architectures. For example, the majority of Alexa Skills for Amazon Echo are implemented using AWS Lambda.

- Clickstream and other near real-time streaming data processes – Serverless solutions offer the flexibility to scale up and down with the flow of data, enabling them to match throughput requirements without the complexity of building a scalable compute system for each application. When paired with a technology like Amazon Kinesis, AWS Lambda can offer high-speed processing of records for clickstream analysis, NoSQL data triggers, stock trade information, and more.
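For the streaming case, a Lambda function subscribed to a Kinesis stream receives records in batches, with each record's payload base64-encoded under `kinesis.data`. The minimal sketch below counts page views per page in one batch; the JSON payload shape (`{"page": ...}`) and the handler name are assumptions for illustration.

```python
import base64
import json
from collections import Counter


def handle_clickstream_batch(event, context=None):
    """Counts page views in one batch of Kinesis records."""
    views = Counter()
    for record in event.get("Records", []):
        # Kinesis delivers each record's payload base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        click = json.loads(payload)  # assumed payload shape: {"page": "/home"}
        views[click["page"]] += 1
    return dict(views)
```

The handler holds no polling loop or worker pool; the platform invokes it as batches arrive.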

In addition to the widely adopted use cases discussed above, companies are also applying serverless approaches to the following domains:

- Big data, such as map-reduce problems, high-speed video transcoding, stock trade analysis, and compute-intensive Monte Carlo simulations for loan applications. Developers have found that it’s much easier to parallelise work with a serverless approach, especially when it is triggered through events, which has led them to apply serverless techniques to a growing range of big data problems without the need for infrastructure management.

- Low-latency, custom processing for web applications and assets delivered through content delivery networks. By moving serverless event handling to the edge of the internet, developers gain lower latency and the ability to customise retrievals and content fetches easily. This enables a new spectrum of use cases that are latency-optimised based on the client’s location.

- Connected devices, enabling serverless capabilities such as AWS Lambda functions to run inside commercial, residential, and hand-held Internet of Things (IoT) devices. Serverless solutions such as Lambda functions offer a natural abstraction from the underlying physical (and even virtual) hardware, enabling them to more easily transition from the data centre to the edge, and from one hardware architecture to another, without disrupting the programming model.

- Custom logic and data handling in on-premises appliances such as AWS Snowball Edge. Because they decouple business logic from the details of the execution environment, serverless applications can easily function in a wide variety of environments, including on an appliance.
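The point about easy parallelism in big data workloads can be sketched as a map-reduce word count. Each map call is stateless and independent, so on a serverless platform every chunk could be handled by its own function invocation. In this local sketch a `ThreadPoolExecutor` stands in for those concurrent invocations, and the function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def map_word_count(chunk):
    """The 'map' step: stateless, so each call could run as its own invocation."""
    return Counter(chunk.split())


def reduce_counts(partials):
    """The 'reduce' step: merge the partial counts from every map call."""
    total = Counter()
    for partial in partials:
        total.update(partial)  # adds counts rather than replacing them
    return total


def fan_out_word_count(chunks, max_parallel=8):
    # Locally, a thread pool plays the role of many independent invocations.
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        partials = list(pool.map(map_word_count, chunks))
    return reduce_counts(partials)
```

Because no map call depends on another, adding more chunks adds more parallel work rather than requiring a larger, pre-sized compute fleet.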

Typically, serverless applications are built using a microservices architecture in which an application is separated into independent components that perform discrete jobs. These components, which are made up of individual Lambda functions along with APIs, message queues, databases, and other resources, can be independently deployed, tested, and scaled. In fact, serverless applications are a natural fit for microservices because of their function-based model. By avoiding monolithic designs and architectures, organisations can become more agile because developers can deploy incrementally and replace or upgrade individual components, such as the database tier, if needed.

In many cases, simply isolating the business logic of an application is all that’s required to convert it into a serverless app. Services like AWS Lambda support popular programming languages and enable the use of custom libraries. Long-running tasks are expressed as workflows composed of individual functions that operate within reasonable time frames, which enables the system to restart or parallelise individual units of computation as required.
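One way to express a long-running task as short-lived steps is to have each step process a bounded slice of work and return a cursor saying where to resume. In the sketch below, `process_step` is the unit a single function invocation would run, and the `run_workflow` loop plays the role a workflow or orchestration service would fill. The squaring is placeholder business logic and all names are hypothetical.

```python
def process_step(items, start, batch_size):
    """One short-lived unit of work: handle at most batch_size items, then
    return the results and the position to resume from (None when finished)."""
    end = min(start + batch_size, len(items))
    results = [item * item for item in items[start:end]]  # placeholder business logic
    next_start = end if end < len(items) else None
    return results, next_start


def run_workflow(items, batch_size=3):
    # A workflow service would drive these steps and could retry or
    # parallelise any single step; here a simple loop plays that role.
    output, cursor = [], 0
    while cursor is not None:
        results, cursor = process_step(items, cursor, batch_size)
        output.extend(results)
    return output
```

Because each step's input is just the item list and a cursor, a failed step can be restarted from its cursor without repeating completed work.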

Is Serverless Always Appropriate?

Almost all modern applications can be modified to run successfully, and in most cases in a more economical and scalable fashion, on a serverless platform. However, there are a few times when serverless is not the best choice:

- When the goal is explicitly to avoid making any changes to an application.

- When fine-grained control over the environment is required, such as specifying particular operating system patches or accessing low-level networking operations, in order for the code to run properly.

- When an on-premises application hasn’t been migrated to the public cloud.

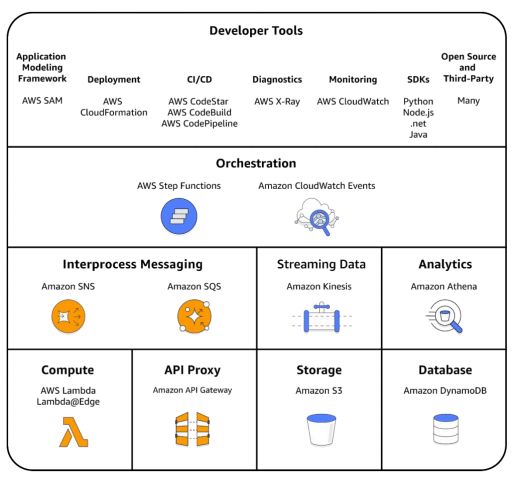

Evaluating a Cloud Vendor’s Serverless Platform

When architecting a serverless application, companies and organisations need to consider more than the serverless compute functionality that executes the app’s code. Complete serverless apps require a broad array of services, tools, and capabilities spanning storage, messaging, diagnostics, and more. An incomplete or fragmented serverless portfolio from a cloud vendor can be problematic for serverless developers, who might have to return to server-based architectures if they can’t code consistently at the same level of abstraction. A serverless platform consists of the set of services that make up the serverless app, such as compute and storage components, as well as the tools needed to author, build, deploy, and diagnose serverless apps. Running a serverless application in production requires a reliable, flexible, and trustworthy platform that can handle the demands of everyone from small startups to global corporations. The platform must scale all of an application’s elements and provide end-to-end reliability. Just as with conventional apps, helping developers succeed in creating and delivering serverless solutions is a multidimensional challenge. To meet the needs of large-scale enterprises across a variety of industries, a serverless platform should offer the following capabilities:

- A high-performance, scalable, and reliable cloud logic layer.

- Responsive first-party event and data sources and simple connectivity to third-party systems.

- Integration libraries that enable developers to get started easily, and to add new patterns quickly and safely to existing solutions.

- A vibrant developer ecosystem that helps developers discover and apply solutions in a variety of domains, and for a wide set of third-party systems and use cases.

- A collection of fit-for-purpose application modeling frameworks.

- Orchestration that offers state and workflow management.

- Global scale and broad reach that includes assurance program certification.

- Built-in reliability and at-scale performance, without the need to provision capacity at any level of scale.

- Built-in security along with flexible access control for both first-party and third-party resources and services.

At the core of any serverless platform is the cloud logic layer responsible for running the functions that represent business logic. Because these functions are often executed in response to events, simple integration with both first-party and third-party event sources is essential to making solutions simple to express and enabling them to scale automatically in response to varying workloads. For example, serverless functions may need to execute each time an object is created in an object store or for each update made to a serverless NoSQL database. Serverless architectures eliminate all of the scaling and management code typically required to integrate such systems, shifting that operational burden to the cloud vendor.
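For the NoSQL case, a function attached to a DynamoDB stream receives one record per item change. The sketch below assumes the stream record fields `eventName` (`INSERT`, `MODIFY` or `REMOVE`) and `dynamodb.Keys`, whose values arrive in DynamoDB's typed attribute format such as `{"S": "job-1"}`. It also assumes string-typed keys, and the handler name is hypothetical.

```python
def handle_table_changes(event, context=None):
    """Invoked with a batch of DynamoDB stream records; returns (action, key) pairs."""
    changes = []
    for record in event.get("Records", []):
        action = record["eventName"]  # "INSERT", "MODIFY" or "REMOVE"
        typed_keys = record["dynamodb"]["Keys"]
        # Unwrap typed attribute values; this sketch handles string ("S") keys only.
        key = {name: value["S"] for name, value in typed_keys.items()}
        changes.append((action, key))
    return changes
```

No polling or change-tracking code is needed in the function itself; connecting it to the stream is platform configuration.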

Developing successfully on a serverless platform requires that a company be able to get started easily, including finding ready-made templates for common use cases, whether they involve first-party or third-party services. These integration libraries are essential to convey successful patterns—such as processing streams of records or implementing webhooks—especially during the period when developers are migrating from server-based to serverless architectures. A closely related need is a broad and diverse ecosystem that surrounds the core platform. A large, vibrant ecosystem helps developers readily discover and use solutions from the community and makes it easy to contribute new ideas and approaches. Given the variety of toolchains in use for application lifecycle management, a healthy ecosystem is also necessary to ensure that every language, IDE, and enterprise build technology has the runtimes, plugins, and open source solutions necessary to integrate the building and deploying of serverless apps into existing approaches. It’s also critical for serverless apps to leverage existing investments, including developers’ knowledge of frameworks such as Express and Flask and popular programming languages. A broad ecosystem provides important acceleration across domains and enables developers to repurpose existing code more readily in a serverless architecture.

Application modeling frameworks, such as the open specification AWS Serverless Application Model (AWS SAM), enable a developer to express the components that make up a serverless app, and support the tools and workflows required to build, deploy, and monitor those applications. Another framework that is key to the success of a serverless platform is orchestration and state management. The largely stateless nature of serverless computing requires a complementary mechanism for enabling long-running workflows. Orchestration solutions enable developers to coordinate the multiple, related application components that are typical in a serverless application, while still enabling those applications to be composed of small and short-lived functions. Orchestration services also simplify error handling and provide integration with legacy systems and workflows, including those that run for longer than serverless functions themselves generally permit.
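As an example of orchestration, AWS Step Functions describes workflows in the Amazon States Language (ASL). The sketch below builds a two-step definition as a Python dictionary, with a `Retry` policy on the second task. The state names are hypothetical and the Lambda ARNs are placeholders to be replaced with real function ARNs.

```python
import json


def build_definition(validate_arn, process_arn):
    """Two Task states run in sequence; 'Process' is retried on any error."""
    return {
        "Comment": "Validate input, then process it",
        "StartAt": "Validate",
        "States": {
            "Validate": {"Type": "Task", "Resource": validate_arn, "Next": "Process"},
            "Process": {
                "Type": "Task",
                "Resource": process_arn,
                "Retry": [{
                    "ErrorEquals": ["States.ALL"],  # match every error type
                    "IntervalSeconds": 2,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }],
                "End": True,
            },
        },
    }


definition_json = json.dumps(
    build_definition(
        "arn:aws:lambda:REGION:ACCOUNT_ID:function:validate",  # placeholder ARN
        "arn:aws:lambda:REGION:ACCOUNT_ID:function:process",   # placeholder ARN
    ),
    indent=2,
)
```

A JSON string like this is what gets supplied as the `definition` when creating a state machine (for example through boto3's `create_state_machine`). Deployment details such as the IAM role are outside this sketch. The retry policy lives in the workflow definition rather than in each function.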

To support customers worldwide, including multinational corporations with global reach, a serverless platform must offer global scale, including data centres and edge locations worldwide. Edge locations are key to bringing low-latency serverless computing close to end users. Because the platform, rather than the application developer, is responsible for supplying the scalability and high availability of serverless apps, its intrinsic reliability is critical. Features like built-in retries and dead-letter queues for unprocessed events help developers construct robust systems with end-to-end reliability using serverless approaches. Performance is equally important, especially low invocation overhead (latency), given that language runtimes and customer code are instantiated on demand in a serverless app.
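The following conceptual sketch shows what built-in retries and dead-letter queues mean for a single event. The platform retries a failing handler a bounded number of times, then parks the event with its last error in a dead-letter queue instead of losing it. This is a local model for illustration, not how Lambda is implemented; the function name, the plain list used as a queue, and the default of three attempts are assumptions.

```python
def deliver(event, handler, dead_letter_queue, max_attempts=3):
    """Run handler(event) up to max_attempts times. On repeated failure,
    record the event and its last error in dead_letter_queue and return None."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return handler(event)
        except Exception as exc:  # any error counts as a failed attempt
            last_error = exc
    dead_letter_queue.append({"event": event, "error": repr(last_error)})
    return None
```

Because failed events end up somewhere inspectable, they can be examined and replayed later rather than silently dropped.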

Finally, the platform must have a broad array of security and access controls, including support for virtual private networks, role-based and access-based permissions, robust integration with API-based authentication and access control mechanisms (including third-party and legacy systems), and support for encrypting application elements, such as environment variable settings. Serverless systems, by their design, offer an inherently higher level of security and control for the following reasons:

- First-class fleet management, including security patching – In a system like AWS Lambda, the servers that execute requests are constantly monitored, cycled, and security scanned. They can be patched within hours of a key security update becoming available, whereas many enterprise compute fleets operate under much looser patching and update SLAs.

- Limited server lifetimes – Every machine that executes customer code in AWS Lambda is cycled multiple times per day, limiting its exposure to attack and ensuring constantly up-to-date operating system and security patching.

- Per-request authentication, access control, and auditing – Every compute request executed on AWS Lambda, regardless of its source, is individually authenticated, authorised to access specified resources, and fully audited. Requests arriving from outside AWS data centres via Amazon API Gateway also pass through additional internet-facing defences, including protection against DoS attacks. Companies migrating to serverless architectures can use AWS CloudTrail to gain detailed insight into which users are accessing which systems with what privileges, and they can use AWS Lambda to process the audit records programmatically.
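As an example of processing audit records programmatically, CloudTrail delivers log files to Amazon S3 as gzip-compressed JSON documents containing a `Records` array. Each record includes fields such as `eventSource`, `eventName` and `userIdentity`. The sketch below counts which caller invoked which API action. Fetching the file from S3 is left out, and falling back to "unknown" when `userIdentity.arn` is absent is a simplifying assumption.

```python
import gzip
import json
from collections import Counter


def summarise_cloudtrail_log(raw_bytes):
    """Count (caller, service, API action) triples in one gzipped CloudTrail log file."""
    log = json.loads(gzip.decompress(raw_bytes))
    summary = Counter()
    for record in log.get("Records", []):
        # Some callers (for example AWS services) may have no 'arn' in userIdentity.
        caller = record.get("userIdentity", {}).get("arn", "unknown")
        summary[(caller, record["eventSource"], record["eventName"])] += 1
    return summary
```

Run from a function triggered by new log files, a summary like this can feed alerts or reports on who is accessing which systems.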

The AWS Serverless Platform

Since the introduction of Lambda in 2014, AWS has created a complete serverless platform. It has a broad collection of fully managed services that enable organisations to create serverless apps that can integrate seamlessly with other AWS services and third-party services. Figure illustrates a subset of the components in the AWS serverless platform and their relationships.